How flash and NVMe change latency for shared storage: this post covers a subset of the FCIA webcast from November 1st.

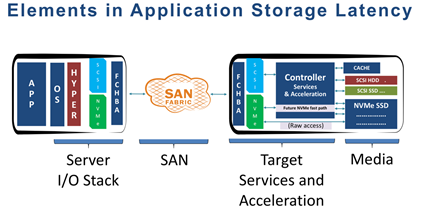

Many elements contribute to networked storage latency.

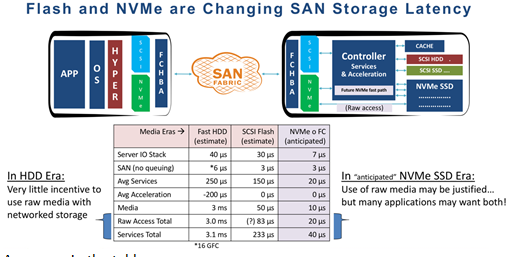

The primary change in shared storage latency is twofold:

- Flash is a medium that performs orders of magnitude faster than rotational disk.

- The NVMe protocol is designed to take advantage of flash media, whose properties resemble memory far more than the rotational, mechanical media of the past.

Historically, storage arrays have improved latency and performance while providing storage services such as data protection, encryption, and dedupe without adding any noticeable latency, because those services were fast compared with the media underneath them. That is changing quickly: for applications chasing the lowest latency, the protocol and the storage services are now visible in the end-to-end latency.

Let’s look at each element of the end-to-end shared storage stack, as it applies to the application, and see what changes with FC-NVMe.

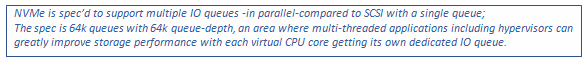

Starting with the server IO stack (on the left), the NVMe stack improves performance compared to SCSI for a few reasons:

- The NVMe protocol is much more lightweight than SCSI.

- The SCSI stack has evolved over three decades and in the process has become inefficient, with more than a hundred commands, including commands to control tape robots as well as to read and write blocks on a device. NVMe, by contrast, was designed recently with the sole purpose of communicating with solid-state storage media.

- We have not yet seen applications take advantage of multiple IO queues; when they do, NVMe will drastically improve IO performance.
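To illustrate why multiple IO queues matter, here is a toy Python model. It is not a driver and does not use any real NVMe API; the class and function names, queue counts, and depths are hypothetical. It compares every core funnelling into one shared queue with each core owning its own queue, which is the layout NVMe enables (the NVMe specification allows up to roughly 64K IO queues, each up to 64K commands deep).

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QueuePair:
    """Hypothetical model of one submission queue; not a real driver structure."""
    qid: int
    depth: int
    pending: deque = field(default_factory=deque)

    def submit(self, command: str) -> bool:
        # A full queue rejects the command; a real host would have to retry later.
        if len(self.pending) >= self.depth:
            return False
        self.pending.append(command)
        return True


def build_queues(num_queues: int, depth: int) -> list[QueuePair]:
    # Create num_queues independent queues of equal depth.
    return [QueuePair(qid=i, depth=depth) for i in range(num_queues)]


def submit_all(queues: list[QueuePair], requests: list[tuple[int, str]]) -> int:
    """Submit (core, command) pairs; core N uses queue N % len(queues).

    With one queue per core, no two cores share a queue (so no shared lock);
    with a single queue, every core funnels into queue 0.
    Returns the number of commands accepted.
    """
    accepted = 0
    for core, command in requests:
        if queues[core % len(queues)].submit(command):
            accepted += 1
    return accepted


# Four cores, each issuing eight reads (arbitrary illustrative workload).
requests = [(core, f"READ lba={core * 100 + i}") for core in range(4) for i in range(8)]

single = build_queues(num_queues=1, depth=8)    # one shared queue
per_core = build_queues(num_queues=4, depth=8)  # one queue per core

print(submit_all(single, requests))    # 8: the shared queue fills up
print(submit_all(per_core, requests))  # 32: every core has its own queue
```

The model deliberately ignores completion handling and interrupts; it only shows that per-core queues remove the shared bottleneck an application could otherwise hit.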

Passing through the HBA and across the Fibre Channel (FC) network, there is no difference in speed between SCSI and NVMe. That said, new enhancements to the FC standard are being developed for FC-NVMe first and then backported to SCSI.

It is important to point out that all the familiar storage network services apply to FC-NVMe, including the Name Server, zoning, and frame-level network visibility.

Inside the storage array, the SCSI versus NVMe driver stack comparison applies to some extent, though many array vendors have more efficient driver stacks than the host-side OS drivers.

The main difference is going to be in the controller and services layer.

As the table shows:

- Storage services fall in the ~150 microsecond range.

- Storage media is dropping to the ~10 microsecond range with the latest NVMe media technologies.

In other words, the services layer now adds roughly 15 times the latency of the media itself.
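The arithmetic behind that shift can be sketched in a few lines of Python. It uses only the two approximate figures quoted above and ignores the host stack, HBA, and fabric contributions shown in the table, so it is an illustration of proportions rather than a full latency budget.

```python
# Approximate figures quoted in the text, in microseconds.
STORAGE_SERVICES_US = 150.0
NVME_MEDIA_US = 10.0


def services_share(services_us: float, media_us: float) -> float:
    """Fraction of (services + media) latency spent in storage services.

    Host, HBA, and fabric latencies are deliberately left out of this sketch.
    """
    return services_us / (services_us + media_us)


share = services_share(STORAGE_SERVICES_US, NVME_MEDIA_US)
print(f"{share:.2%}")                        # 93.75%
print(STORAGE_SERVICES_US / NVME_MEDIA_US)   # 15.0
```

With slower rotational media the same 150 microseconds of services would have been a small fraction of the total; with ~10 microsecond media it dominates.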

The expectation is that storage arrays in the NVMe era will provide an accelerated NVMe path, or perhaps even direct media access, for applications where the absolute lowest latency matters far more than any storage service.
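A minimal sketch of that trade-off, assuming an array that offers both a full services path and a hypothetical accelerated path that skips the services layer. The path names, the `AppProfile` fields, and the rule itself are illustrative assumptions, not any vendor's actual policy; the latencies reuse the approximate services and media figures from the table and ignore host and fabric time.

```python
from dataclasses import dataclass

# Approximate figures from the text, in microseconds; host and fabric time ignored.
SERVICES_PATH_US = 150.0 + 10.0  # storage services + NVMe media
DIRECT_PATH_US = 10.0            # hypothetical accelerated path: media only


@dataclass
class AppProfile:
    """Hypothetical description of an application's storage requirements."""
    name: str
    latency_target_us: float
    needs_data_protection: bool


def choose_path(app: AppProfile) -> str:
    # Data protection is provided by the services layer, so it forces that path.
    if app.needs_data_protection:
        return "services"
    # Otherwise bypass services only when the services path misses the target.
    if SERVICES_PATH_US > app.latency_target_us:
        return "direct"
    return "services"


oltp = AppProfile("transactional database", latency_target_us=200, needs_data_protection=True)
analytics = AppProfile("ML analytics on a copy", latency_target_us=50, needs_data_protection=False)

print(choose_path(oltp))       # services
print(choose_path(analytics))  # direct
```

The rule encodes the point made next: an application that needs data protection stays on the services path regardless of its latency target.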

That said, I think we can agree that many or most enterprise applications today require at least data protection.

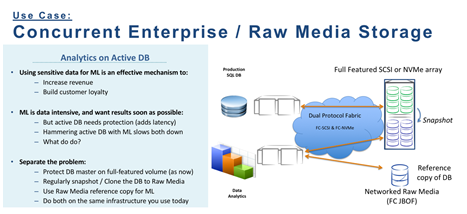

Now let’s look at a use case that customers have discussed.

This use case represents an application with an appetite for the absolute lowest latency; the trade-off in this example is data protection.

At a high level, the use case is the ability to run real-time (or near-real-time) analytics on a transactional database, applying machine learning (ML) in the process, without impacting the transactional performance of the database.

As illustrated in the diagram, the database is protected through storage services. Snapshot copies are taken and offloaded in the background either to NVMe namespaces with direct access on the same array or to an NVMe JBOF (just a bunch of flash). The machine learning analytics then run on the database copy with latencies below 50 microseconds.

For more information, take a look at the FCIA webcast.
